Meta Platforms Inc., the parent company of Facebook and Instagram, is diving back into facial recognition technology by conducting trials aimed at bolstering security measures against scams. This move reflects a significant pivot from the company’s earlier decisions to distance itself from the controversies surrounding facial recognition. However, as Meta re-engages with this contentious technology, it seeks to address mounting criticism and challenges that have historically defined its approach to user privacy.

One of the main initiatives under testing is a facial matching system designed to counteract “celeb-baiting.” This fraudulent scheme uses images of public figures to lure users toward deceptive advertising, often leading them to malicious websites. To combat it, Meta compares faces used in advertisements with the official profile pictures of celebrities on its platform. If a match is found and the ad is determined to be malicious, Meta pledges to block it immediately.
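Meta has not published how this matching works, so the following is only a minimal sketch of the decision described above, under stated assumptions: faces are represented as numeric embedding vectors, a match means cosine similarity above a cutoff, and the ad's maliciousness is decided by a separate review step. The names `MATCH_THRESHOLD`, `cosine_similarity`, and `should_block_ad`, and the value 0.8, are hypothetical and chosen for illustration.

```python
import math

# Hypothetical cutoff; a real system would tune this on labeled data.
MATCH_THRESHOLD = 0.8


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def should_block_ad(ad_face, celebrity_faces, ad_is_malicious):
    """Block only when the ad face matches a known public figure AND the ad
    has separately been judged malicious (both conditions from the article).

    ad_face: embedding of the face found in the ad (assumed input).
    celebrity_faces: dict of name -> embedding from official profile pictures.
    ad_is_malicious: result of a separate, unspecified review step.
    """
    matches_celebrity = any(
        cosine_similarity(ad_face, reference) >= MATCH_THRESHOLD
        for reference in celebrity_faces.values()
    )
    return matches_celebrity and ad_is_malicious
```

In this sketch a face match alone never blocks an ad, which mirrors the article's two-part condition: a legitimate ad featuring a celebrity would pass, while a scam ad using the same face would not.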

This proactive methodology underscores Meta’s intention to safeguard users from exploitation. By using facial recognition as a tool to authenticate advertising content associated with prominent individuals, the company aims to enhance the integrity of its ad ecosystem. Yet, while the potential benefits seem clear, the application of such technology revives lingering questions about privacy and data security. The assurance that all facial data collected will be immediately deleted post-verification serves as a form of risk mitigation. However, skepticism remains, particularly from privacy advocates who fear potential misuse.

The backdrop of this renewed interest in facial recognition at Meta cannot be ignored. The company previously shuttered its facial recognition features in 2021 in the wake of public outcry and growing regulatory scrutiny. This step was part of a broader initiative aimed at redefining how it manages user data and privacy. Many thoughtful critics raised alarms over how sensitive data could be weaponized if breached, making the prospect of reintroducing facial recognition particularly contentious.

Global applications of facial recognition technology have often raised ethical and legal concerns. In countries like China, it has been used for surveillance purposes, tracking citizens, and penalizing individuals for minor infractions like jaywalking. Such chilling examples of facial recognition’s potential misuse serve as cautionary tales for those operating in more liberal democracies, where citizen rights are more vigorously defended.

Moreover, the targeted tracking of marginalized groups based on facial recognition has underscored the inherent risks of such surveillance. This aspect is especially pressing for regulators in Western countries, who are charged with crafting policies that ensure the responsible use of technology. Given this heavy context, Meta’s trial could face heightened scrutiny, not only from governments but from civil liberties organizations as well.

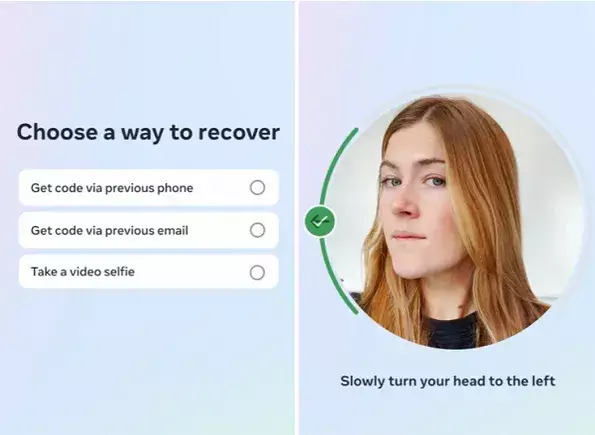

In a separate venture, Meta is also testing video selfies for account verification. Users will upload a video of themselves, which will then be compared to their profile pictures to restore access to compromised accounts. The process is meant to resemble familiar identity verification methods used in other applications, so that users can regain access without significant hurdles.
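As a rough illustration of that flow, and not a description of Meta's actual system, the sketch below compares face embeddings taken from video frames against a profile-picture embedding and discards the selfie data once a decision is reached. The embedding inputs, the function name `verify_video_selfie`, and the 0.8 threshold are all assumptions made for this example.

```python
import math


def _cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def verify_video_selfie(frame_embeddings, profile_embedding, threshold=0.8):
    """Return True if any video frame matches the profile picture.

    frame_embeddings: list of face embeddings, one per sampled frame (assumed).
    profile_embedding: embedding of the account's profile picture (assumed).
    The frame data is cleared in `finally`, so it is discarded whether the
    check succeeds, fails, or raises.
    """
    try:
        best = max(
            (_cosine(frame, profile_embedding) for frame in frame_embeddings),
            default=0.0,
        )
        return best >= threshold
    finally:
        frame_embeddings.clear()  # delete selfie data after verification
```

Clearing the list in a `finally` block is one simple way to model the "delete after verification" promise: the caller's copy of the selfie data is emptied on every exit path.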

Although the company reiterates its commitment to keeping uploaded selfies secure and private, and to deleting the data after verification, this initiative once again highlights facial recognition’s re-emergence in everyday user interactions. While it may make regaining account access more convenient, the intrusion of such systems into personal life may alarm those who prioritize online privacy.

Meta’s current experimentation with facial recognition raises profound questions about the balance between security and privacy. The dual trials of celeb-bait mitigation and identity verification reflect an earnest intent to resolve real issues on its platform. However, the implications of reintroducing facial recognition, even in limited ways, should not be underestimated.

As the company seeks to expand its technological reach, it must contend with the scrutiny of stakeholders, including public watchdogs, regulators, and users themselves. Mark Zuckerberg and his team are undoubtedly aware of the spotlight on them as they reintroduce facial recognition technology. The prevailing discourse will focus on whether Meta can navigate this path without falling back into the traps that previously marred its reputation.

All in all, while Meta’s new push may herald improvements in platform security, the shadow of potential misuse lingers. The company must handle these new trials with care, or risk reigniting public outrage and distrust. The pivotal challenge now lies in ensuring that advancements in technology do not come at the expense of user privacy and security.