In the rapidly evolving landscape of artificial intelligence, new technologies are continually reshaping the sector. Chinese AI startup DeepSeek has added to that momentum with its latest release, DeepSeek-V3, an ultra-large language model with 671 billion parameters. Built on a mixture-of-experts architecture, the model is meant to challenge established competitors and to advance the company’s stated ambition of creating artificial general intelligence (AGI).

Available on the Hugging Face platform, DeepSeek-V3 illustrates the growing strength of open-source solutions. Its mixture-of-experts mechanism activates only a subset of its parameters for any given input, which keeps task execution focused and efficient. According to DeepSeek’s benchmarks, the model outperforms notable open-source counterparts, including Meta’s Llama 3.1-405B, and closely rivals proprietary systems such as those developed by Anthropic and OpenAI, a sign that the gap between open and closed AI technologies is narrowing.

DeepSeek originally emerged as an offshoot of the quantitative hedge fund High-Flyer Capital Management, a background that appears to have shaped its approach to AI development. As the company continues to evolve its technologies, it remains openly ambitious about AGI: systems capable of understanding and performing a wide range of intellectual tasks at a level comparable to human cognition.

At its core, DeepSeek-V3 combines a multi-head latent attention (MLA) architecture with the company’s own DeepSeekMoE design. The model activates just 37 billion of its 671 billion parameters for each token processed, roughly 5.5% of the total. This sparse activation lets DeepSeek maintain strong model performance while keeping per-token compute far below that of a dense model of the same size.
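To make the sparse-activation idea concrete, here is a minimal sketch of top-k expert routing for a single token. The expert count, `TOP_K`, and the random router scores are illustrative assumptions, not DeepSeek-V3’s actual configuration; only the 37 billion and 671 billion figures come from the article.

```python
import random

NUM_EXPERTS = 8   # hypothetical toy value, not the real expert count
TOP_K = 2         # hypothetical number of experts activated per token


def top_k_route(scores, k):
    """Return the indices of the k highest-scoring experts for one token."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return ranked[:k]


def active_fraction(active_params, total_params):
    """Share of the model's parameters that take part in processing one token."""
    return active_params / total_params


# Toy router scores for one token (a real router computes these from the token).
rng = random.Random(0)
scores = [rng.random() for _ in range(NUM_EXPERTS)]
chosen = top_k_route(scores, TOP_K)
print("experts used for this token:", chosen)

# Figures from the article: 37B of 671B parameters are active per token.
print(f"active share: {active_fraction(37, 671):.1%}")  # prints "active share: 5.5%"
```

Only the chosen experts run for that token; the rest of the expert parameters stay idle, which is where the compute saving comes from.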

One of the standout features of DeepSeek-V3 is its auxiliary-loss-free load-balancing strategy. This technique monitors and adjusts the load on each expert so that none is overburdened, and it does so without an extra loss term that could compromise overall model performance. DeepSeek-V3 also introduces multi-token prediction (MTP), which DeepSeek says improves training efficiency and allows the model to generate about 60 tokens per second. Together, these changes target both training methodology and inference efficiency.
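The load-balancing idea can be sketched as a per-expert bias that is nudged after every batch: down for experts that received more than their share of tokens, up for those that received less. This is a toy illustration of that general bias-adjustment approach, not DeepSeek’s implementation; the function names, step size, score skew, and batch sizes are all assumptions chosen for the demo.

```python
import random


def route(scores, bias, k):
    """Pick the k experts with the highest biased score for one token."""
    ranked = sorted(range(len(scores)),
                    key=lambda i: scores[i] + bias[i], reverse=True)
    return ranked[:k]


def update_bias(bias, load, step):
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean_load = sum(load) / len(load)
    new_bias = []
    for b, l in zip(bias, load):
        if l > mean_load:
            new_bias.append(b - step)
        elif l < mean_load:
            new_bias.append(b + step)
        else:
            new_bias.append(b)
    return new_bias


def simulate(step, num_experts=4, k=1, tokens=200, batches=50, seed=0):
    """Return the per-expert token counts of the final batch."""
    rng = random.Random(seed)
    # Assumed skew: raw router scores systematically favor later experts.
    skew = [0.5 * i for i in range(num_experts)]
    bias = [0.0] * num_experts
    load = [0] * num_experts
    for _ in range(batches):
        load = [0] * num_experts
        for _ in range(tokens):
            scores = [rng.random() + skew[i] for i in range(num_experts)]
            for expert in route(scores, bias, k):
                load[expert] += 1
        bias = update_bias(bias, load, step)  # no loss term involved
    return load


unbalanced = simulate(step=0.0)   # step 0 disables the bias update
balanced = simulate(step=0.05)
print("final-batch load without balancing:", unbalanced)
print("final-batch load with balancing:   ", balanced)
```

In this toy setup the most popular expert absorbs most tokens when the update is disabled, while the bias update spreads tokens far more evenly, and nothing is added to the training loss.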

The training of DeepSeek-V3 reflects a strong focus on cost efficiency. By combining algorithmic and hardware optimizations, including FP8 mixed-precision training and the DualPipe strategy for pipeline parallelism, DeepSeek reports completing the training run in about 2.788 million GPU hours at a cost of roughly $5.57 million. This figure contrasts starkly with the expenditures typically associated with training very large models, such as the more than $500 million estimated for Llama 3.1.
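As a quick sanity check on the cost figure, the arithmetic below assumes roughly 2.788 million GPU hours and a rental rate of $2 per GPU hour, which is the rate DeepSeek’s technical report uses for its estimate. The rate is not stated in this article, so treat it as an assumption; actual costs would vary with hardware pricing.

```python
GPU_HOURS = 2_788_000        # about 2.788 million GPU hours
USD_PER_GPU_HOUR = 2.00      # assumed rental rate, not stated in the article


def training_cost_usd(gpu_hours, usd_per_gpu_hour):
    """Estimated compute cost: GPU hours multiplied by the hourly rental rate."""
    return gpu_hours * usd_per_gpu_hour


cost = training_cost_usd(GPU_HOURS, USD_PER_GPU_HOUR)
print(f"${cost / 1e6:.3f} million")  # prints "$5.576 million"
```

The result matches the roughly $5.57 million cited above. Note that this covers compute rental for the training run only, not research, staffing, or earlier experiments.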

Such reduced costs do not, however, come at the expense of performance. By DeepSeek’s account, DeepSeek-V3 is now the strongest open-source model available, pairing affordability with high capability. The company’s benchmark results show it outperforming its open-source rivals and challenging closed-source offerings, with particularly strong results on benchmarks focused on the Chinese language and on mathematics.

The emergence of DeepSeek-V3 underscores a transformative moment within the AI sector, where open-source models are closing in on proprietary alternatives, offering practically equivalent performance in various tasks. This trend is promising not only because it democratizes access to advanced AI technologies, but also because it propels competition within the field, diminishing the likelihood of any single company monopolizing AI capabilities.

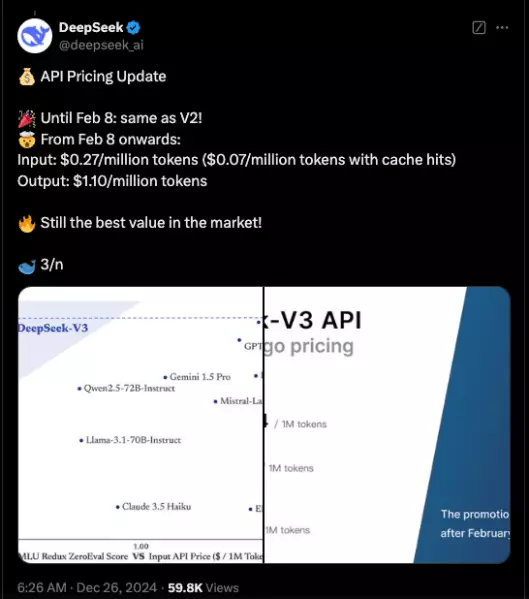

For the industry at large, DeepSeek’s decision to publish the model’s code on GitHub under an MIT license makes it easier for others to experiment with and improve on the work. In addition, a commercial API gives enterprises access to the model, with competitive introductory pricing designed to encourage adoption.

Looking ahead, DeepSeek is not just releasing models; it is setting a trajectory that could reshape how AI is developed and deployed. That path is likely to involve continued iterations and expanded capabilities aimed at AGI. DeepSeek’s work represents a step toward versatile AI with a sustained capacity for learning and adapting, both of which are crucial for truly autonomous systems. The broader picture is one of rapid advancement and of growing possibilities as the field moves closer to systems that can operate seamlessly within human contexts.