The rapid advancement of AI technology raises significant concerns about privacy and user autonomy. A notable episode in this ongoing struggle recently unfolded with Signal, a messaging platform known for its staunch commitment to user privacy. Signal announced a new Screen Security feature designed to counter the intrusive capabilities of Microsoft’s AI-powered Recall feature. The response underscores the importance of putting user privacy ahead of new technology for its own sake, and it highlights a balance that is increasingly at risk.

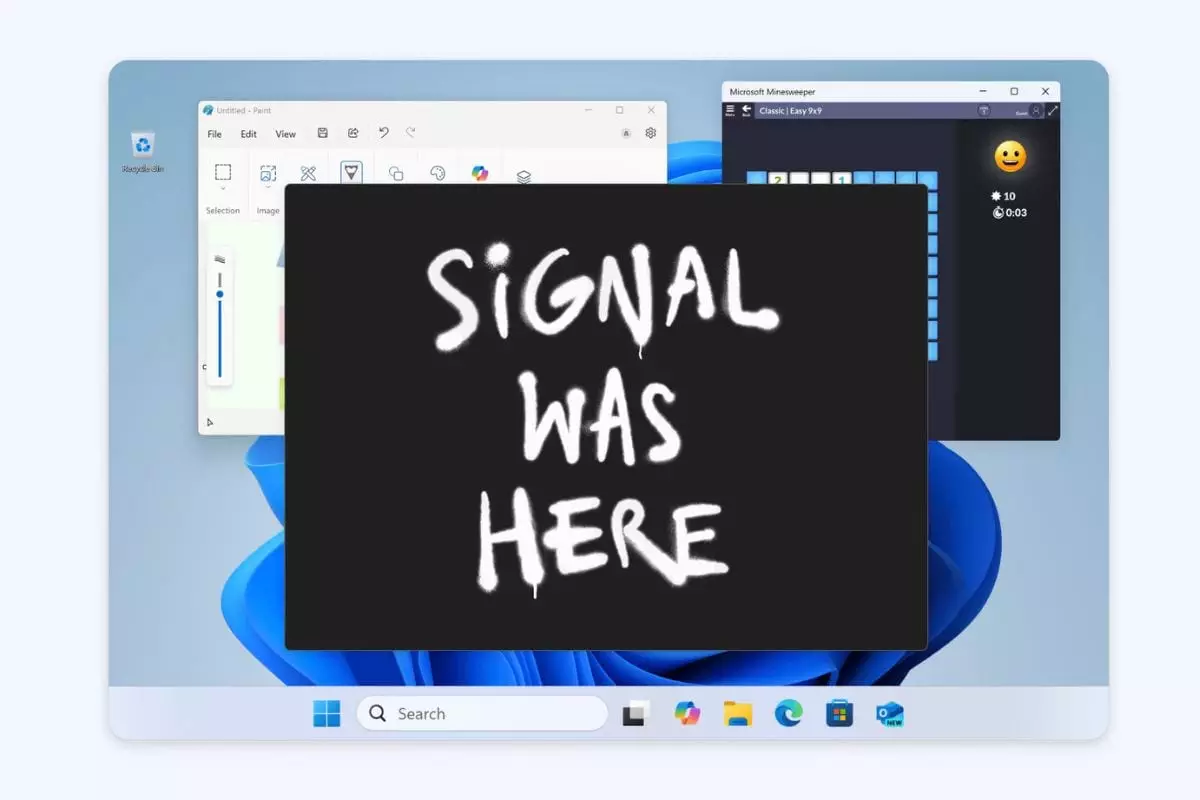

At its core, Signal’s introduction of Screen Security is more than a defensive tactic; it reflects a philosophy rooted in respect for users and vigilance against privacy infringements. With Recall, Microsoft proposed a technology that continuously captures user activity, suggesting a paradigm shift in how personal data is treated. Users who rely on platforms like Signal deserve assurance that their private conversations remain confidential and unmonitored. By implementing Screen Security, an automatic screen capture blocker on Windows 11 devices, Signal reinforces its central mission: ensuring privacy in an AI-driven world.

The Dark Side of AI Features

While Microsoft has claimed that Recall is designed to enhance the user experience by giving an AI a comprehensive history of user activity, the potential for misuse is alarming. The backlash following its announcement stemmed from widespread concerns about privacy violations, especially because users were initially unaware of how much data was being collected. Even after Microsoft changed course, making Recall opt-in and refining its security features to address these concerns, many critics deemed the adjustments inadequate.

Signal’s frustration with Microsoft’s approach is palpable, particularly regarding the lack of tools that would let app developers limit access to sensitive information. The absence of these controls leaves messaging platforms vulnerable to invasive technologies. Signal’s new Screen Security feature thus emerges not only as a proactive defense mechanism but also as an urgent plea for technology companies to rethink how they treat user data. It raises an essential question: should technological progress be prioritized over safeguarding user privacy?

Navigating the Accessibility Dilemma

Despite its clear advantages, Signal’s Screen Security feature raises pertinent issues related to accessibility. A hardline stance on privacy can come at the expense of user experience, particularly for individuals who rely on screen readers and magnification tools. With such robust security measures enabled, there is a risk that these tools may stop working properly, inadvertently alienating users who depend heavily on assistive technologies.

However, Signal has anticipated this dilemma by allowing users to turn the Screen Security feature off if their accessibility needs require it. This appears to be a thoughtful approach, letting users decide for themselves how to weigh privacy against accessibility, though it remains critical that they are adequately warned about the consequences of disabling a security setting. Signal’s transparency in communicating these options demonstrates respect for user sovereignty, a hallmark of ethical engagement in technology.

Implications for the Future of Messaging and AI

The implications of these developments extend beyond one messaging app and one tech giant. Signal’s response to Microsoft’s AI ambitions raises broader questions about digital rights in an era where invasive technology continues to proliferate. It serves as a clarion call for other apps in the space: should they adopt more stringent privacy measures in response, or capitulate to AI advancements that threaten user confidentiality?

As we navigate the uncertain waters of AI, the call for deliberate, conscientious design in privacy-focused technologies becomes ever more essential. Tech companies must recognize their responsibilities to users, especially amid the relentless push for innovation. Importantly, they must also engage with and listen to feedback from advocates for privacy and digital rights in order to create secure and user-friendly solutions.

A future where privacy is not an afterthought but a primary design principle is within reach; however, it will require commitment and collaboration across the tech landscape. Signal’s proactive measures exemplify the kind of advocacy that can drive meaningful change and set a precedent for ethical technology moving forward.