As artificial intelligence becomes increasingly sophisticated, one issue arises prominently: how do we ensure these models provide accurate and reliable outputs? Much like people often consult experts for more reliable information, AI models can also benefit greatly from collaboration. Researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have been addressing this challenge through a novel approach. Their algorithm, dubbed “Co-LLM,” represents a promising step in enhancing the accuracy of large language models (LLMs) by enabling them to collaborate dynamically.

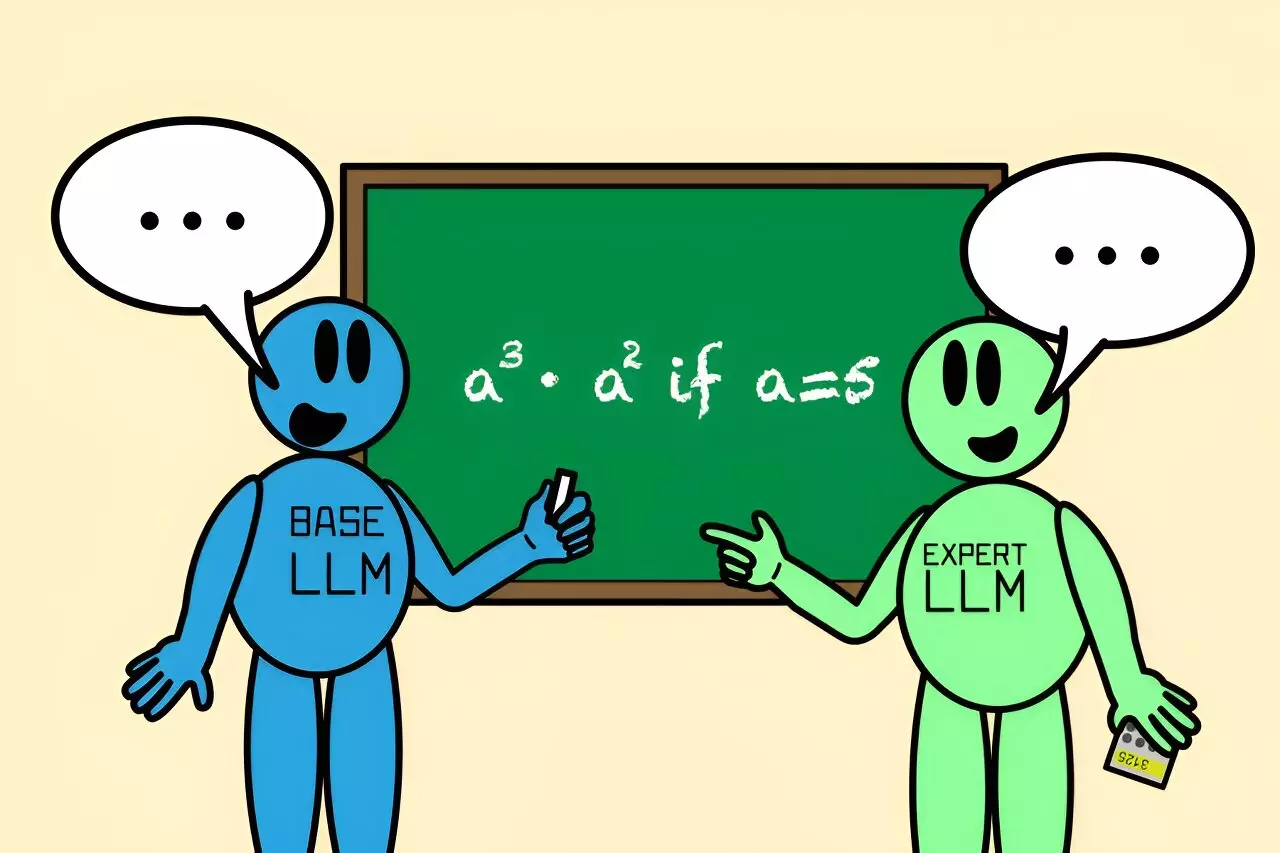

When a general-purpose LLM encounters a query that requires specialized knowledge, such as a medical question or a complex mathematical problem, it may struggle to give a complete or correct answer. Rather than relying solely on its built-in knowledge, the model can call on a specialized expert model so that more accurate information reaches the response. This mimics human behavior: people often turn to knowledgeable peers or authorities when faced with a hard question. Whereas earlier approaches predominantly relied on static structures and large data sets to determine when to consult an expert, Co-LLM introduces a more fluid, conversational way of collaborating with other models as needed.

The essence of Co-LLM lies in evaluating each word, or token, of a response as the general-purpose LLM generates it. As the primary model constructs an answer, Co-LLM uses a “switch variable,” a learned component, to judge at each token whether the general-purpose model is competent to produce it or whether the specialized model should supply that token instead. The result is a fluid interaction between the two models: the general-purpose LLM lays the groundwork for the answer, while the expert model fills in the specific parts that need its knowledge.
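
The token-level hand-off described above can be illustrated with a toy sketch. This is not the CSAIL implementation: the function names, the 0.5 threshold, and the scripted stand-in models are all assumptions made for illustration, and the "switch variable" is reduced to a deferral probability that the base model returns alongside each proposed token.

```python
from typing import Callable, List, Tuple

# Hypothetical interfaces, for illustration only:
# the base model returns (proposed_token, defer_probability) for a prefix,
# the expert model returns just its next token for the same prefix.
BaseModel = Callable[[List[str]], Tuple[str, float]]
ExpertModel = Callable[[List[str]], str]


def collaborative_decode(
    base: BaseModel,
    expert: ExpertModel,
    prompt: List[str],
    max_tokens: int = 20,
    threshold: float = 0.5,  # assumed cutoff, not a value from the research
    eos: str = "<eos>",
) -> Tuple[List[str], List[str]]:
    """Generate tokens one at a time, deferring to the expert when the
    base model's deferral probability exceeds the threshold."""
    tokens = list(prompt)
    sources: List[str] = []
    for _ in range(max_tokens):
        base_token, defer_prob = base(tokens)
        if defer_prob > threshold:
            token, source = expert(tokens), "expert"
        else:
            token, source = base_token, "base"
        if token == eos:
            break
        tokens.append(token)
        sources.append(source)
    return tokens[len(prompt):], sources


def toy_base(prefix: List[str]) -> Tuple[str, float]:
    """Scripted stand-in for a general-purpose model: confident on the
    sentence frame, unsure about the factual detail after 'in'."""
    script = {
        "?": ("It", 0.1),
        "It": ("went", 0.1),
        "went": ("extinct", 0.1),
        "extinct": ("in", 0.2),
        "in": ("<unsure>", 0.9),
    }
    return script.get(prefix[-1], ("<eos>", 0.0))


def toy_expert(prefix: List[str]) -> str:
    """Scripted stand-in for a specialized model; returns a placeholder
    rather than a real date."""
    return "<expert-date>"


answer, sources = collaborative_decode(
    toy_base, toy_expert, ["When", "did", "it", "go", "extinct", "?"]
)
print(list(zip(answer, sources)))
```

In this sketch only the final token comes from the expert; the rest of the sentence is written by the base model, which mirrors the division of labor the article describes.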

Imagine asking the system when a particular bear species went extinct. The general LLM might start drafting an answer, but the switch variable could identify that a detail such as the extinction date needs expert input. Co-LLM then brings in the specialized model to supply that part of the response. This collaboration can improve accuracy and also efficiency: because the expert model is not needed at every step, fewer computational resources are spent on token generation.
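
The efficiency argument can be made concrete with some back-of-the-envelope arithmetic. The sketch below rests on simplifying assumptions that do not come from the research: each token costs one base-model step, each deferred token costs one additional expert step, and the work the expert needs to read the existing context is ignored. The cost figures are hypothetical units, not measurements.

```python
def collaborative_cost(
    n_tokens: int, n_deferred: int, base_cost: float, expert_cost: float
) -> float:
    """Cost when the base model runs on every token and the expert
    runs only on the deferred ones (simplified model, see lead-in)."""
    if not 0 <= n_deferred <= n_tokens:
        raise ValueError("n_deferred must be between 0 and n_tokens")
    return n_tokens * base_cost + n_deferred * expert_cost


def expert_only_cost(n_tokens: int, expert_cost: float) -> float:
    """Cost when the expert model generates every token itself."""
    return n_tokens * expert_cost


# Hypothetical numbers: a 100-token answer, 20 tokens deferred,
# and an expert step that is 10x as expensive as a base step.
mixed = collaborative_cost(100, 20, base_cost=1.0, expert_cost=10.0)
expert_only = expert_only_cost(100, expert_cost=10.0)
print(mixed, expert_only, mixed / expert_only)
```

Under these assumed numbers the collaborative run costs 300 units against 1,000 for the expert alone. The saving shrinks as the deferral rate rises, and it disappears once the expert is consulted on nearly every token.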

The implications of Co-LLM’s architecture are fascinating. In biomedicine, for instance, the algorithm can be paired with models trained specifically on healthcare data, such as the Meditron model. When asked to identify the components of a prescription drug or the underlying mechanisms of a disease, Co-LLM draws on the expertise of these models to produce better answers than a standalone LLM would. Similarly, when solving complex math problems, a specialized model lets Co-LLM correct calculations that would otherwise be prone to error.

In one instance, a general-purpose LLM solved an equation incorrectly, while Co-LLM, with access to a math-specialized model, arrived at the correct solution through collaborative input. The system thus proves effective not only at improving factual accuracy but also at providing context for double-checking uncertain answers.

The development of Co-LLM doesn’t stop there. One direction of the ongoing research is to refine the algorithm further by adding mechanisms for self-correction. People often backtrack when they run into incorrect information, and teaching the models to do something similar could let Co-LLM recover more robustly from errors, for example when the expert model itself supplies a wrong answer.

The implications extend beyond theoretical scenarios. Real-world applications in enterprise settings are also being explored, where up-to-date knowledge could feed into documentation that is revised continuously. This would let organizations keep their records current with less manual effort, sparing staff the repetitive work of routine data updates.

Colin Raffel, an authority in the field, remarked on Co-LLM’s innovative approach and underscored its potential to change the way models collaborate. By deciding at the token level whether to consult an expert, Co-LLM makes AI responses more flexible and brings model collaboration closer to the way people work together. The research marks a significant step toward more intelligent systems that can draw on expertise dynamically, and it points to a promising future for collaborative AI applications across diverse domains. As researchers continue to refine these techniques, the hope is for a robust ecosystem of LLMs that can tackle increasingly complex inquiries with confidence and accuracy.