In an era where digital spaces have become an intrinsic part of young people’s lives, Meta’s recent initiatives reveal a proactive stance toward safeguarding its youngest users. Rather than passively relying on existing safety protocols, the social media giant is actively expanding features designed to educate, empower, and shield teenagers from online threats. These updates represent a pivotal shift from reactive moderation to a preventive, user-friendly approach that emphasizes awareness and ease of use.

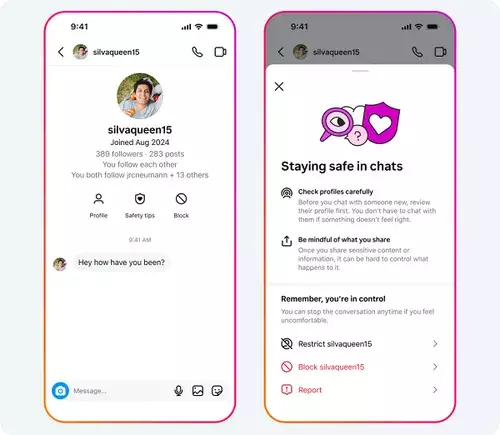

The introduction of “Safety Tips” within Instagram chats exemplifies this shift. By embedding quick-access guidance directly into conversations, Meta acknowledges the importance of timely, digestible information. This feature not only educates teens about common online risks, such as scams or predatory behavior, but also fosters a culture of vigilance that can be self-sustaining. When prevention tools are integrated seamlessly into the user experience, they become less intimidating and more instinctive, which is crucial for forming enduring safety habits among impressionable users.

Moreover, the streamlined blocking and reporting options underscore a deep understanding of teenage user behavior. Recognizing that complexity can hinder action, Meta’s combined block-and-report function simplifies the process, making it more likely that teens will respond promptly to suspicious interactions. Such tools are not just about technical control; they signal to teens that their online well-being is a priority, potentially reducing feelings of helplessness when faced with problematic encounters.

Addressing Deep-Rooted Challenges with Strategic Transparency

Meta’s efforts extend beyond direct safety features to tackling broader threats such as grooming and exploitation, especially concerning adult-managed teen accounts. The platform’s transparency about removing tens of thousands of accounts involved in sexually inappropriate activities demonstrates a no-compromise approach to safeguarding minors. This acknowledgment is crucial, as it highlights both the scale of the problem and the company’s commitment to addressing it head-on.

However, these measures raise uncomfortable questions about the pervasiveness of such threats and the effectiveness of current detection practices. While deleting accounts is necessary, it remains a reactive measure that may be insufficient if underlying social and technological vulnerabilities persist. Meta’s assertion that more than half a million related accounts were also removed suggests a vigilant and dynamic response, yet the sheer number also underscores the importance of ongoing innovation in preventive technology.

In tandem with these efforts, Meta’s expansion of protections—such as restricting who can message minors, employing nudity filters, and alerting users about location sharing—creates multiple layers of defense. While these are important, their efficacy ultimately depends on consistent user engagement and education. Merely having features is insufficient; teens must understand and actively utilize these tools for meaningful safety gains.

Balancing Regulation and Corporate Responsibility

An intriguing aspect of Meta’s strategy involves supporting regulatory efforts to restrict social media access based on age. Policy proposals in the European Union advocating for a minimum age of 15 or 16 reflect a recognition that platform controls alone cannot fully shield vulnerable users. By endorsing these initiatives, Meta arguably aligns itself with a broader societal push toward responsible digital consumption, although such support may also serve strategic interests.

It’s worth questioning whether Meta’s backing for higher age thresholds is primarily driven by genuine concern or by a desire to mitigate regulatory scrutiny. Given its extensive financial and brand stakes, the company’s stance might be a pragmatic effort to shape policy in a way that balances user safety with corporate flexibility. Regardless, the move indicates an acknowledgment that platform-level protections must be complemented by societal and legislative actions—the kind of multi-pronged approach necessary in today’s complex digital landscape.

Finally, these developments speak to a broader reality: online safety for teenagers is an ongoing challenge rather than a one-time fix. Meta’s evolving suite of protections, together with its transparency initiatives, suggests a recognition that digital literacy, user agency, and regulatory frameworks must work in concert. As platforms continually adapt to new threats and societal expectations, the onus remains on tech giants to lead with innovation, accountability, and a genuine commitment to those most vulnerable: teenagers navigating an increasingly complex digital world.