A study by the German Aerospace Center’s Institute of Robotics and Mechatronics points to a different future for robotic touch. Earlier approaches often relied on artificial skin to mimic human touch. This team instead combines sensors already inside the robot with machine-learning algorithms. The method offers a fresh perspective on tactile sensing and could improve human-robot interaction in a range of environments.

The Essence of Touch: A Two-Way Street

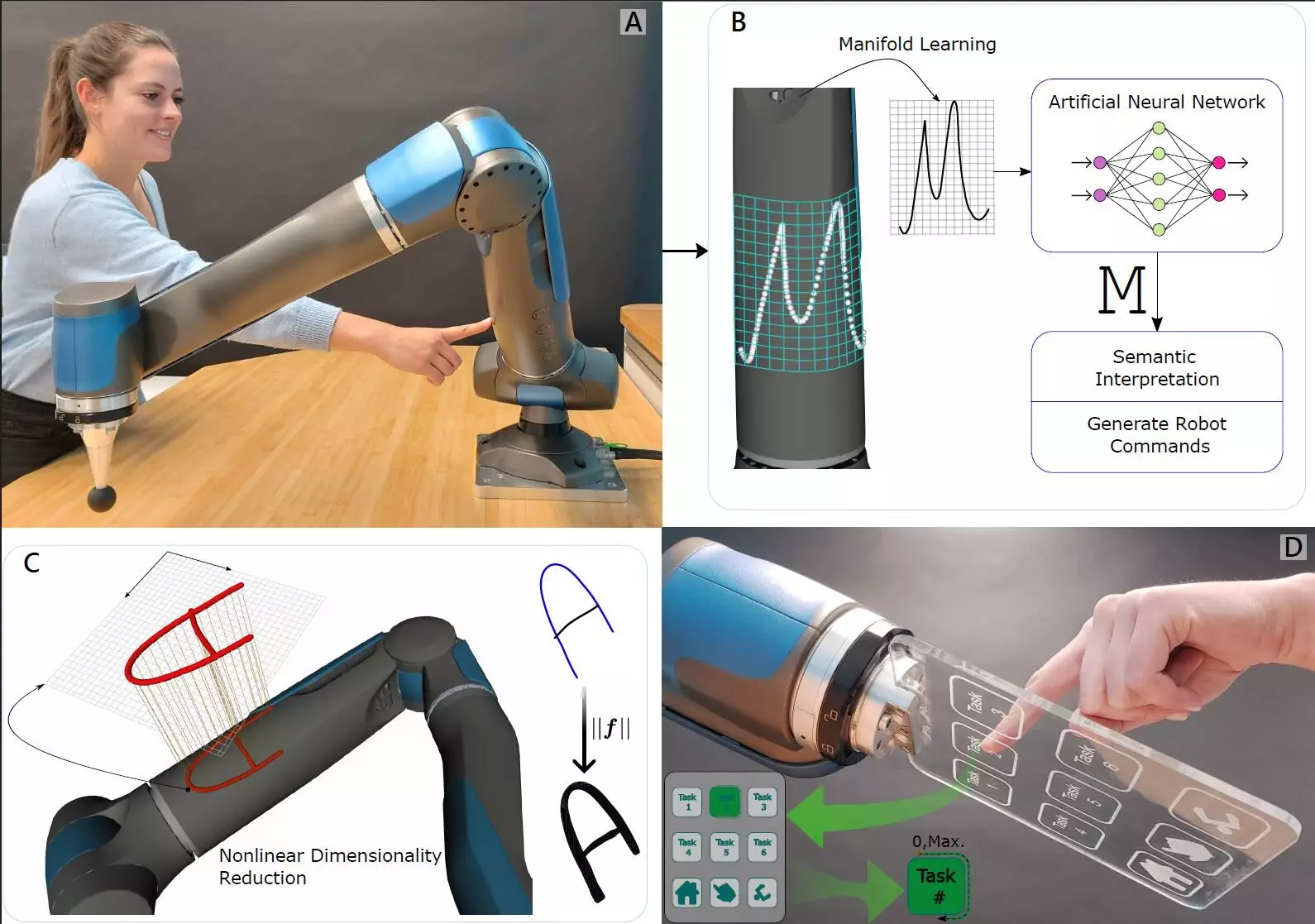

Understanding touch is fundamental to building robots that can engage with their surroundings as humans do. Touch is inherently interactive: it involves both sensing external stimuli and responding to them. Using force-torque sensors, the researchers captured this interaction by measuring the forces that external contact exerts on the robot. Their work emphasizes that touch isn’t merely about feeling something; it’s also about being affected by another object. Treating touch as this two-way exchange gives robots richer sensory information and makes them more responsive in real-world applications.

The Mechanisms Behind This Innovation

At the heart of the research are sensitive force-torque sensors placed in the joints of a robotic arm. These sensors register forces applied from multiple directions, enabling the robot to gauge how and where it is being touched. Coupled with a machine-learning algorithm, the robotic system learns to interpret the different sensations associated with touch. Unlike previous technologies that required the entire robot to be enveloped in sensory material, this method simplifies the hardware while extending what the robot can do. The machine-learning component is crucial: it turns raw force readings into meaningful interpretations, allowing the robot to distinguish one kind of touch from another.
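To make the "where is it being touched" idea concrete, here is a minimal sketch of one textbook relationship, not the researchers' method. It assumes a single sensor reporting a force F and a torque τ about its own origin, and a contact that applies a pure force, so that τ = r × F for a contact point r. Under those assumptions the point (F × τ) / |F|² lies on the force's line of action and is the point of that line closest to the sensor. The function names and the example numbers are hypothetical.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def cross(a: Vec3, b: Vec3) -> Vec3:
    """Cross product of two 3-vectors."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def dot(a: Vec3, b: Vec3) -> float:
    """Dot product of two 3-vectors."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def line_of_action_point(force: Vec3, torque: Vec3, min_force: float = 1e-6) -> Vec3:
    """Point on the contact force's line of action closest to the sensor origin.

    Assumes a single pure-force contact, i.e. torque = r x force.
    The true contact point is this point plus some multiple of `force`;
    a model of the robot's surface would be needed to pick that multiple.
    """
    f_squared = dot(force, force)
    if f_squared < min_force ** 2:
        raise ValueError("force too small to localize the contact")
    f_cross_t = cross(force, torque)
    return (f_cross_t[0] / f_squared,
            f_cross_t[1] / f_squared,
            f_cross_t[2] / f_squared)


# Hypothetical example: a 5 N push straight down at (0.2, 0.1, 0.0) metres.
contact = (0.2, 0.1, 0.0)
push = (0.0, 0.0, -5.0)
measured_torque = cross(contact, push)  # what an ideal sensor would report
estimate = line_of_action_point(push, measured_torque)
print(estimate)  # approximately (0.2, 0.1, 0.0)
```

The estimate matches the contact point here only because the example point happens to be perpendicular to the push. In general the geometry gives a line rather than a point, which is one reason a learned model that maps sensor readings to touch locations is attractive.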

Real-World Applications and Implications

The implications of this research are significant, particularly in industrial settings where robots and humans work side by side. A robot that can tell different types of touch apart opens the door to safer and more intuitive interaction. In environments where precision is paramount, such as manufacturing or healthcare, the ability to feel subtle pressures could change how tasks are performed, helping to keep human workers safe and operations running smoothly.

Moreover, the ability of robots to identify the specific point on their structure where they are touched could lead to advances in assistive technologies. Robots could be programmed not just to carry out tasks but also to interpret human intent from physical interaction. The emotional responses people associate with touch, such as trust, reassurance, and even companionship, may eventually carry over into robotics and influence the design and function of future systems.

The intersection of robotics, machine learning, and a refined understanding of touch signals a new era of intelligent interaction. This approach not only enhances robotic sensitivity but also deepens the potential for collaborative work between humans and machines. As the technology matures, these interactions are likely to keep evolving, pointing toward a future in which robots not only assist people but also enrich the human experience through tactile engagement.