In today’s fast-paced world, we often rely on technology to make our lives easier. Whether it’s finding the quickest route home or selecting ripe produce, consumers are constantly seeking guidance. Imagine standing in the supermarket, scrutinizing a pile of apples, and wishing for a dependable app that could tell you which one is the best pick. Recent research from the Arkansas Agricultural Experiment Station points to a promising approach: using machine learning to improve food quality assessments while accounting for the differences between how humans and machines perceive food quality.

Machine-learning technologies have made remarkable strides in many fields, but when it comes to predicting food quality, their predictions are often less consistent than human judgment. This inconsistency stems largely from how these systems are trained. Current models rely primarily on basic visual inputs and overlook the significant effect of environmental factors, such as lighting and color temperature, on how we perceive quality. The study, led by Dongyi Wang, a smart agriculture expert at the University of Arkansas, addressed these gaps by evaluating how much more reliable machine learning could become if it learned from human perception data collected under various lighting conditions.

The findings challenge the assumption that machine learning can, on its own, match the reliability of human judgment. Wang’s study shows that machine learning models can improve prediction accuracy by incorporating insights from human evaluations, particularly when those evaluations account for lighting variations during assessment. Specifically, the research indicated that using human perception data could reduce prediction errors by about 20 percent.

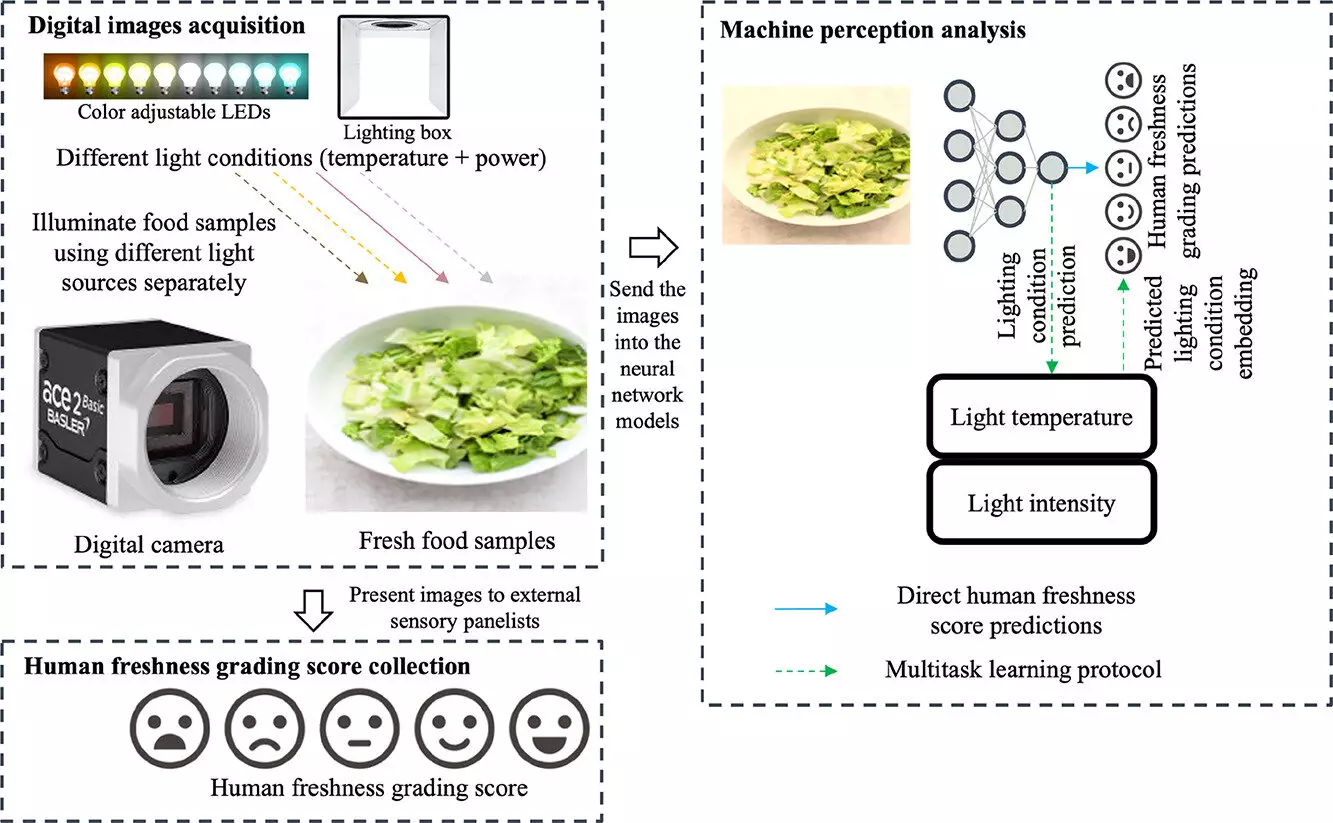

One striking finding from the study is how strongly lighting influences perceived food quality. The research focused on lettuce, whose color changes subtly as it ages. In an experiment involving 109 participants, who evaluated images of Romaine lettuce taken over several days, the team built a robust dataset spanning varying degrees of freshness and spoilage. Participants provided sensory evaluations under different lighting conditions, grading the lettuce on a 0 to 100 scale.
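To make the shape of such a dataset concrete, the sketch below shows one plausible way individual 0 to 100 grades could be collapsed into a single label per image and lighting condition, by averaging across participants. The record layout, image names, lighting labels, and scores are all invented for illustration. They are not the study's data or its actual processing pipeline.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical ratings invented for illustration (NOT from the study):
# (image_id, lighting_condition, participant_id, score on a 0-100 scale)
ratings = [
    ("lettuce_day1", "warm", "p01", 82),
    ("lettuce_day1", "warm", "p02", 78),
    ("lettuce_day1", "cool", "p01", 75),
    ("lettuce_day1", "cool", "p02", 71),
    ("lettuce_day5", "warm", "p01", 55),
    ("lettuce_day5", "cool", "p01", 40),
]

def mean_scores(records):
    """Average every participant's score for each (image, lighting) pair."""
    grouped = defaultdict(list)
    for image_id, lighting, _participant, score in records:
        if not 0 <= score <= 100:
            raise ValueError(f"score out of range: {score}")
        grouped[(image_id, lighting)].append(score)
    return {key: mean(scores) for key, scores in grouped.items()}

labels = mean_scores(ratings)
for (image_id, lighting), score in sorted(labels.items()):
    print(f"{image_id:14s} {lighting:5s} {score:.1f}")
```

Keeping lighting in the key, rather than averaging it away, preserves the information a model would need to learn how illumination shifts human judgments.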

The study’s focus on how color temperature and lighting can obscure or accentuate signs of freshness underscores a crucial point: our perception of food quality is not only subjective but also open to manipulation. In practice, warmer lighting can mask browning, leading consumers to choose produce whose actual condition is worse than it appears. This insight matters not just to consumers but also to grocery retailers and manufacturers who strive to present their products in the best light, literally and metaphorically.

The intersection of machine learning and sensory science opens new avenues for food quality assessment. As the study showed, training neural networks on images paired with human evaluations offers a way to approximate human sensory perception. The research team demonstrated that different machine learning models could use the assembled dataset to predict human grades more accurately, yielding more reliable food quality evaluations.
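The core idea, that a model predicts human grades better when it knows the lighting condition, can be illustrated with a deliberately simplified sketch. It swaps the study's neural networks for ordinary least-squares regression. It also replaces real images with a single hypothetical image feature (a "browning" fraction) and a hypothetical warm/cool lighting flag. The data are synthetic and generated from an assumed rule in which warm light partly masks browning.

```python
import numpy as np

# Synthetic data invented for illustration; these are NOT measurements from the study.
rng = np.random.default_rng(seed=0)
n = 200
browning = rng.uniform(0.0, 0.6, n)   # hypothetical image feature: fraction of leaf area that looks brown
warm = rng.integers(0, 2, n)          # hypothetical lighting flag: 1 = warm light, 0 = cool light
# Assumed rating rule: browning lowers the score, and warm light partly masks browning.
score = 95 - 120 * browning + 25 * browning * warm + rng.normal(0.0, 3.0, n)

def design_matrix(browning, warm, use_lighting):
    """Intercept + browning; optionally add the lighting flag and its interaction with browning."""
    columns = [np.ones_like(browning), browning]
    if use_lighting:
        columns += [warm, browning * warm]
    return np.column_stack(columns)

def held_out_mae(use_lighting, n_train=150):
    """Fit least squares on the first n_train rows; report mean absolute error on the remaining rows."""
    X = design_matrix(browning, warm, use_lighting)
    coef, *_ = np.linalg.lstsq(X[:n_train], score[:n_train], rcond=None)
    predictions = X[n_train:] @ coef
    return float(np.mean(np.abs(predictions - score[n_train:])))

mae_image_only = held_out_mae(use_lighting=False)
mae_with_lighting = held_out_mae(use_lighting=True)
print(f"held-out MAE, image feature only:      {mae_image_only:.2f}")
print(f"held-out MAE, image + lighting inputs: {mae_with_lighting:.2f}")
```

Because the lighting effect is built into the synthetic rule, the gap between the two errors says nothing about the size of the roughly 20 percent improvement reported in the research. It only shows the mechanism: a model that cannot see the lighting condition has to average over it, while one that can see it can reproduce how people's grades shift.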

Moreover, this methodology could extend well beyond produce. As Wang noted, other applications, such as assessing jewelry or other consumer products, could similarly benefit from machine learning models adapted with human perception data. This versatility broadens the research’s potential and suggests that such perception-aware models could eventually become standard practice across diverse industries.

The implications of integrating human sensory data into predictive machine learning models are significant for the grocery industry. As consumer expectations around food quality rise, understanding how to display and evaluate products effectively will be crucial. Grocery stores could use insights from this research to improve product presentation so that items appear fresher and more appealing, potentially increasing sales and customer satisfaction.

Adopting app-based or machine-vision tools trained on nuanced human perception data could eventually create a shopping experience in which consumers have reliable assessments of food quality at hand and can make more informed choices.

While current machine-learning approaches to food quality assessment show promise, they will require a significant shift toward accounting for human perceptual biases and environmental influences. With further development, combining human insight with machine learning could transform how consumers select their food and foster a new era of informed, quality-focused shopping.