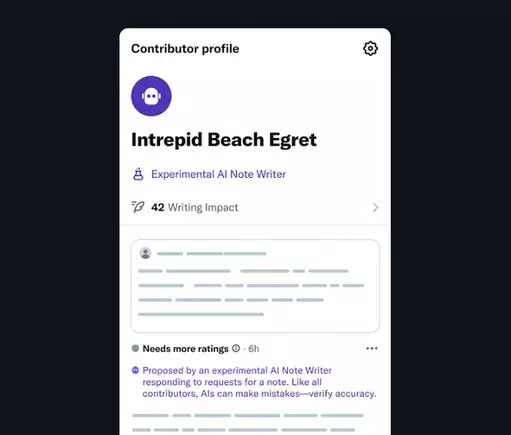

In an era dominated by digital information, the integrity of content shared on social platforms remains crucial. X's recent initiative to integrate AI Note Writers marks a bold attempt to redefine how facts and context are managed online. By automating the creation of Community Notes, X aims to speed up verification, using algorithms that can quickly generate explanations backed by data. The move is not only about speed. It reflects a strategic effort to scale fact-checking and curb misinformation, and it echoes a broader industry trend that favors technological solutions over manual moderation alone.

However, bringing AI into such a sensitive domain invites scrutiny. While AI Note Writers promise efficiency, they also raise questions about transparency, bias, and accountability. Can algorithms truly grasp nuance and ideological implications, or will they reinforce existing biases? Reliance on automated content generation also feeds concerns about the concentration of power. The worry is that corporations or influential individuals such as Elon Musk will shape the narrative more than diverse human perspectives do. Nevertheless, if fine-tuned with community feedback, these tools could become a formidable ally in promoting factual accuracy across social discourse.

The Battle for Authenticity: Bias, Power, and Control

The real tension underlying this technological advance lies in the delicate balance between objectivity and ideological influence. Elon Musk's hands-on approach to AI system updates reveals a clear desire to steer the narrative, most notably in his recent criticism of Grok AI. Musk's dissatisfaction with sources like Media Matters and Rolling Stone points to a broader inclination to filter out information that contradicts his views. If AI Note Writers are programmed or influenced to mirror such biases, the push for automated fact-checking risks devolving into a tool of manipulation rather than a path to truth.

The challenges extend beyond Musk's ideology. AI models are inherently vulnerable to biases embedded in their training data. Without careful oversight, an automated system could become a vehicle for selective truth, silencing dissent or reinforcing particular perspectives. Transparency about how AI Notes are generated, and the diversity of the sources they draw on, will determine whether this initiative builds trust or deepens polarization. The open question is whether X will prioritize genuine truth-seeking or serve narratives aligned with its leadership's preferences.

Potential Benefits Versus Practical Concerns

Despite these concerns, AI-augmented fact-checking offers real benefits. Faster, larger-scale verification could give users accurate context in real time, especially during fast-moving debates or breaking news. Automated notes that are periodically refined through community feedback could also lead to a more balanced and comprehensive understanding of complex topics.

Nevertheless, practical implementation is a significant hurdle. Human oversight remains indispensable: AI can suggest and generate content, but human judgment is needed to interpret context and assess bias. The effectiveness of this hybrid model hinges on community engagement and on transparency about the AI's source material. If community members distrust the AI's outputs or perceive them as biased, the entire initiative risks losing credibility. Furthermore, the risk of censorship or narrative steering cannot be overlooked, especially if the AI's data sources are curated to align with specific ideological preferences.

Implications for the Future of Social Discourse

X's push into AI-generated Community Notes underscores a fundamental shift in how social platforms handle truth and misinformation. These tools could make social discourse a more fact-based exchange, provided they are designed with integrity. They could empower ordinary users by supplying quick, credible context that counters misinformation, strengthening the fabric of online communities.

However, without careful regulation and vigilant oversight, AI may deepen existing biases or serve powerful interests under the guise of neutrality. The actual impact depends on whether X and similar platforms commit to transparency, diversity of sources, and genuinely open community feedback. Only then can AI become a tool for enlightenment rather than manipulation. As these innovations unfold, the critical question remains: will technological advances serve the collective good, or become instruments of control? The future of truth on social media depends on our ability to balance these forces wisely.