The move toward integrating AI functionality into social media platforms has sparked intense debate, particularly regarding user privacy. Recently, Meta announced its plan to introduce AI features that would process users’ private direct messages (DMs) across its platforms, namely Facebook, Instagram, Messenger, and WhatsApp. This change raises significant concerns about data security, user consent, and the overall value of AI in personal communication.

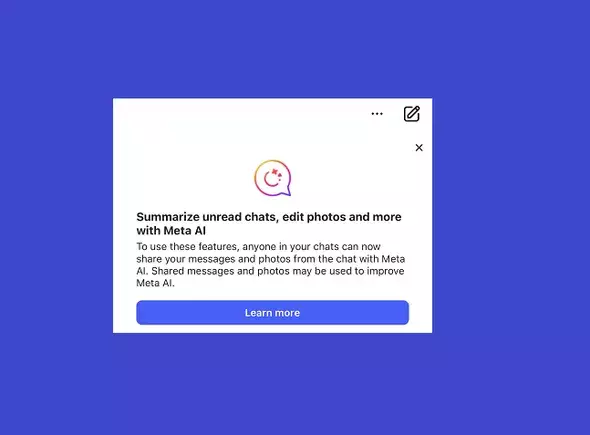

Meta’s chat AI features allow users to summon AI assistance directly within their conversations. When users initiate an interaction by tagging @MetaAI, they can ask questions related to various topics. While this adds a layer of convenience, it simultaneously invites scrutiny regarding the data handling practices that come with such an integration. Meta has acknowledged this potential issue by providing warnings within the app, reminding users to refrain from sharing sensitive personal information such as passwords or financial details during conversations that might involve AI.

This arrangement is not entirely benign; it raises the question of what constitutes “sensitive information.” The boundaries of privacy are increasingly fluid, and users might unknowingly disclose data that could be misused if it ends up in AI training data. The implications for user trust are profound. Users are generally unaware of the specifics of data usage policies, often glossing over the lengthy terms and conditions they must accept to use the service.

The core value proposition of incorporating Meta AI in chats appears fundamentally flawed. Although it presents a seamless way to access AI assistance, the minor benefits are overshadowed by the risks it entails. Users already have the option to communicate with AI through separate chats without jeopardizing the confidentiality of their private discussions. Thus, the integration of AI within direct messaging seems unnecessary and potentially hazardous to personal privacy.

Moreover, Meta’s disclaimer regarding the handling of personal identifiers essentially shifts the burden onto the users. It subtly implies that individuals should bear the responsibility for managing what they share in conversations involving AI. Such a stance could deter users from engaging with the feature altogether, as the awareness of possible repercussions may lead them to err on the side of caution.

The system’s reliance on tacit consent raises further red flags. Users are informed that they already granted Meta the right to use their information when they agreed to the lengthy user agreement. This situation illustrates a significant imbalance of power between large tech companies and everyday users. While it is undoubtedly within Meta’s rights to use this data as outlined, the ethics of such practices are murky.

For an informed user base, features that require substantial trust should ideally operate on explicit consent. Many users may not fully comprehend the gravity of what they consent to, which is problematic, especially when companies like Meta wield such considerable power over their personal data. The paradigm needs to shift from passive acceptance to active acknowledgment of data usage, ensuring that users have a genuine understanding of how their information is utilized.

As Meta rolls out this ambitious AI integration, the social media giant must tread carefully in balancing innovation and user privacy. Users who wish to maintain their privacy could continue using alternative methods of AI engagement, such as dedicated chats outside the secure environment of their direct messages. Nonetheless, the appeal of convenience could lull them into a false sense of security.

It’s crucial for consumers and advocates of digital rights to remain vigilant and demand clearer, more transparent practices that prioritize user consent. The evolving landscape of artificial intelligence calls for rigorous attention to data ethics, compelling companies to reconsider how they interact with user data. As AI continues to develop, the conversation about privacy in private communications can’t be an afterthought; it must be front and center, with users’ right to control their own information at its core.