The machine learning landscape is dominated by large language models (LLMs) such as those developed by OpenAI, Meta, and Google. These systems owe their capability to hundreds of billions of parameters, the adjustable values that are tuned during training. The more parameters an LLM has, the better it can sift through massive datasets and identify complex patterns and connections. That power comes at a significant cost, and not only a financial one. Training and every subsequent query demand vast computational resources, which makes these models energy-intensive. Google, for instance, reportedly spent an estimated $191 million training its Gemini 1.0 Ultra model, which illustrates the capital required to push boundaries in artificial intelligence.

As the technological community grapples with the implications of such energy consumption, the spotlight is shifting towards a different approach: small language models (SLMs). These models, typically containing a few billion parameters, are quickly emerging as versatile tools, adept at handling specific tasks while sidestepping much of the environmental and resource cost tied to their larger counterparts.

The Benefits of Going Small: Targeted Efficiency

While LLMs may be suitable for broad-scale applications like conversation generation or even image synthesis, SLMs are realizing their potential in more narrowly defined arenas. Tasks such as summarizing conversations, answering patient queries in healthcare settings, or managing data from smart devices illustrate the specialized utility of these smaller models. According to Zico Kolter, a computer scientist at Carnegie Mellon University, an SLM with just 8 billion parameters can effectively deliver satisfactory results for many applications. The ability to run these models on personal computers or smartphones, as opposed to relying on enormous data centers, makes them not just efficient but accessible.
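
A rough back-of-the-envelope calculation suggests why parameter count decides where a model can run. The sketch below estimates only the memory needed to hold the weights. The bytes-per-parameter values (2 bytes for 16-bit weights, 0.5 bytes for 4-bit quantized weights), the 400-billion-parameter comparison model, and the helper name `weight_memory_gb` are illustrative assumptions, not figures from the researchers quoted here, and real memory use is higher once activations and runtime overhead are included.

```python
def weight_memory_gb(n_params, bytes_per_param):
    """Approximate memory (in GB, 1 GB = 1e9 bytes) needed just to store the weights."""
    return n_params * bytes_per_param / 1e9


# Hypothetical sizes: an 8-billion-parameter SLM vs. a 400-billion-parameter LLM.
slm_fp16 = weight_memory_gb(8e9, 2)      # 16-bit weights
slm_4bit = weight_memory_gb(8e9, 0.5)    # 4-bit quantized weights (assumed)
llm_fp16 = weight_memory_gb(400e9, 2)    # 16-bit weights

print(f"8B model, 16-bit:   {slm_fp16:.0f} GB")
print(f"8B model, 4-bit:    {slm_4bit:.0f} GB")
print(f"400B model, 16-bit: {llm_fp16:.0f} GB")
```

Under these assumptions the small model's weights fit in the memory of a well-equipped laptop, and in quantized form approach what a high-end phone can hold, while the large model needs many data-center accelerators.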

This accessibility is not merely a matter of practicality; it also rests on the methods researchers use to get the most out of SLMs. Large models are typically trained on vast, messy datasets scraped from the internet. For small models, researchers instead use techniques like “knowledge distillation,” in which a capable teacher model passes what it has learned on to a smaller student model. This pairing lets small models learn from high-quality data, resulting in strong performance despite their limited parameter count.
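
To make the teacher-student idea concrete, here is a minimal sketch of one common formulation, soft-target distillation, in which the student is trained to match the teacher's output probabilities. This is an illustration under stated assumptions, not necessarily the method used by the researchers mentioned above: the logits are made-up numbers, the temperature of 2.0 is an arbitrary choice, and `distillation_loss` is a hypothetical helper written for this example.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's."""
    p = softmax(teacher_logits, temperature)  # teacher: the target
    q = softmax(student_logits, temperature)  # student: what gets trained
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Made-up logits over three candidate next tokens.
teacher = [4.0, 1.0, 0.5]
close_student = [3.8, 1.1, 0.4]  # nearly agrees with the teacher
far_student = [0.5, 1.0, 4.0]    # prefers the wrong token

loss_close = distillation_loss(teacher, close_student)
loss_far = distillation_loss(teacher, far_student)
print(loss_close < loss_far)
```

In training, the student's parameters would be adjusted to drive this loss down, so the small model inherits the teacher's full picture of which answers are plausible rather than only a single correct label.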

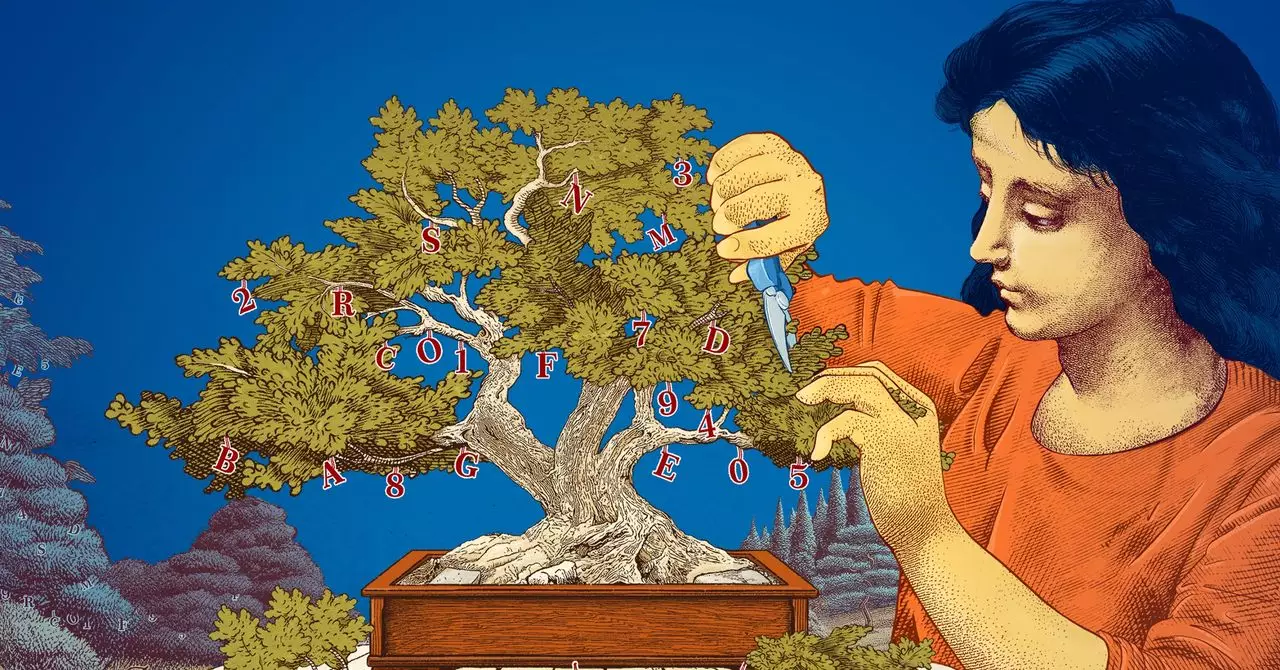

Engineering Brilliance: Pruning for Precision

The process of refining language models does not stop at knowledge distillation. Another important technique is model pruning: trimming away unnecessary connections within a neural network to make it more efficient. The method takes inspiration from the human brain, which becomes more streamlined as a person ages and certain synaptic connections are snipped away. In a 1989 paper, Yann LeCun argued that up to 90% of the parameters in a trained neural network could be removed without sacrificing efficiency. He called the method “optimal brain damage,” and it underscores that less can indeed be more when it comes to model design.
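
The sketch below illustrates the general idea of pruning with a deliberately simple stand-in: magnitude pruning, which zeroes out the weights with the smallest absolute values. This is not the optimal brain damage method itself, which ranks parameters by an estimate of how much removing each one would hurt the model. The weight values and the `magnitude_prune` helper are invented for illustration.

```python
def magnitude_prune(weights, sparsity):
    """Return a copy of `weights` with the smallest-magnitude fraction set to zero."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = round(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


# Ten made-up weights; prune 90% of them.
weights = [0.8, -0.05, 0.02, -1.2, 0.01, 0.3, -0.04, 0.07, 0.005, -0.03]
pruned = magnitude_prune(weights, sparsity=0.9)

remaining = [w for w in pruned if w != 0.0]
print(remaining)  # only the largest-magnitude weight, -1.2, survives
```

In practice a pruned network is usually fine-tuned afterwards so the remaining connections can compensate for the ones that were removed.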

For researchers eager to delve into novel applications and test experimental concepts, small models offer a cost-effective and less risky experimental environment. As Leshem Choshen from the MIT-IBM Watson AI Lab notes, the dynamic nature of smaller models allows for exploration and creativity, paving the way for innovations previously stymied by the intimidating complexity and expense of larger models. This openness fosters an environment where new ideas can flourish with less financial strain and lower stakes.

Future Perspectives: Carving a Niche for Small Models

Large models will still hold sway in complex applications that require broad capabilities, such as generalized chatbots and image generation. For many practical tasks, however, SLMs are becoming the better fit. Smaller models save time and money and need far fewer computational resources, shifting the focus from unbridled growth to efficient functionality.

In an age where the balance between technological advancement and environmental stewardship is more critical than ever, the movement towards SLMs could very well represent a pivotal shift in the artificial intelligence landscape. Through targeted applications and refined methodologies, smaller models are proving that effective machine learning does not always have to come at a hefty price. As researchers continue to innovate, the future holds the promise of making AI accessible, efficient, and environmentally friendly, heralding a new era of intelligent technology that aligns more closely with sustainable practices.