Artificial intelligence has seen significant breakthroughs in recent years. Despite this rapid advancement, robots still lag in cognitive sophistication. The robots most common in industrial settings, such as factories and warehouses, typically perform rigidly predefined tasks. These machines are designed for precision and efficiency, yet they cannot perceive their environment dynamically or adapt their actions in real time. This limitation stems from a lack of what can be described as “general physical intelligence,” the ability to understand and manipulate their surroundings broadly.

The need for more capable robotic systems becomes apparent when we consider how many tasks could potentially be automated. A more adept robot would not only handle repetitive industrial work but could also operate in more chaotic and diverse environments, such as homes. Excitement about advances in artificial intelligence has consequently fueled optimism about robotics. Elon Musk, for example, is promoting Tesla’s Optimus, a humanoid robot that Musk claims may revolutionize domestic labor by 2040 and sell for around $20,000 to $25,000. This ambitious prospect, however, hinges on overcoming the limitations that automation currently faces.

Traditionally, robots have been trained one machine and one task at a time. This fragmentation has inhibited the transfer of knowledge between robot platforms, making adaptable systems harder to build. Recent research, however, points toward more versatile learning models. For instance, the Open X-Embodiment project, led by Google in 2023, facilitated shared learning among 22 different robots across various research laboratories. This approach is a step toward robots that acquire broader competencies by pooling what they learn.

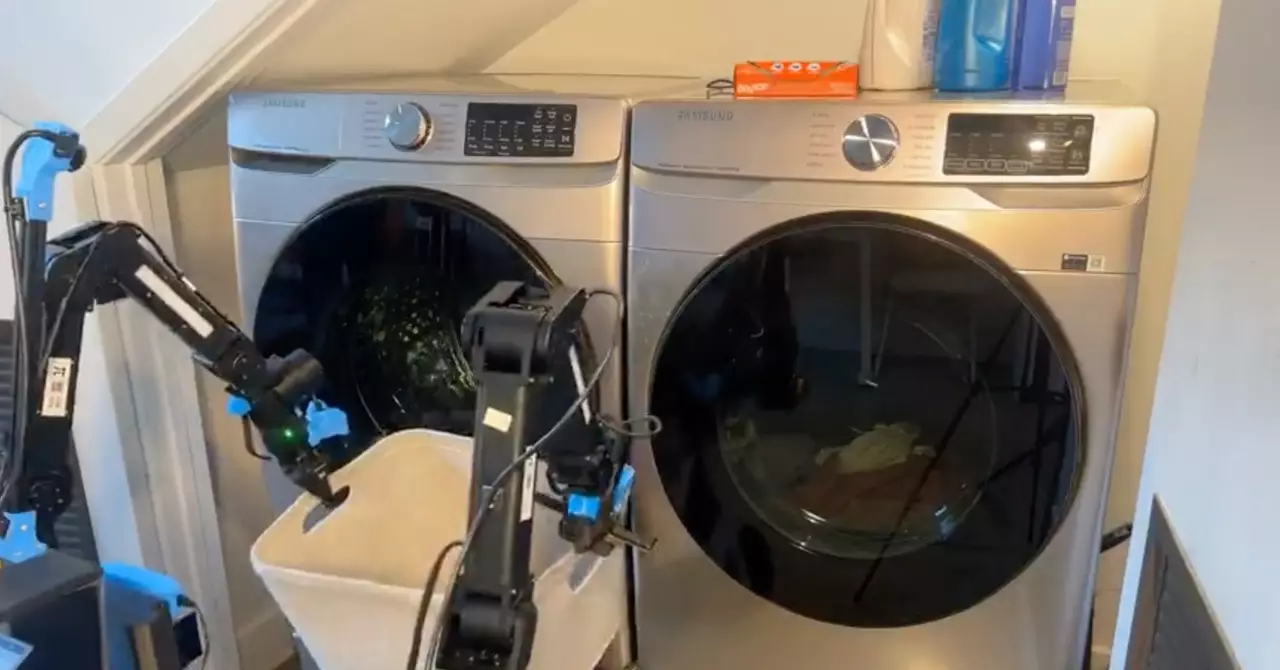

A significant barrier remains: robots have far less training data than large language models, which benefit from extensive textual datasets. Because no comparable volume of robot data exists, companies must generate their own proprietary datasets for training. Companies like Physical Intelligence are developing strategies to tackle this limitation. By combining vision-language models, which can interpret both imagery and text, with diffusion modeling techniques borrowed from AI image generation, they aim to build a more versatile learning framework for robots.

The goal of enabling robots to perform various chores on demand underscores the need for scalable learning mechanisms. As companies refine their methods, the path toward genuinely intelligent robotics remains full of challenges. As Sergey Levine, a cofounder of Physical Intelligence, points out, notable progress has been made, but significant advances are still required before a truly adaptive robotic workforce is achievable. The potential for robots to operate seamlessly in varied contexts is tantalizingly close, yet the journey is still in its infancy. The scaffolding of progress is in place; it awaits the next leap forward.